Parents say ChatGPT encouraged son to kill himself

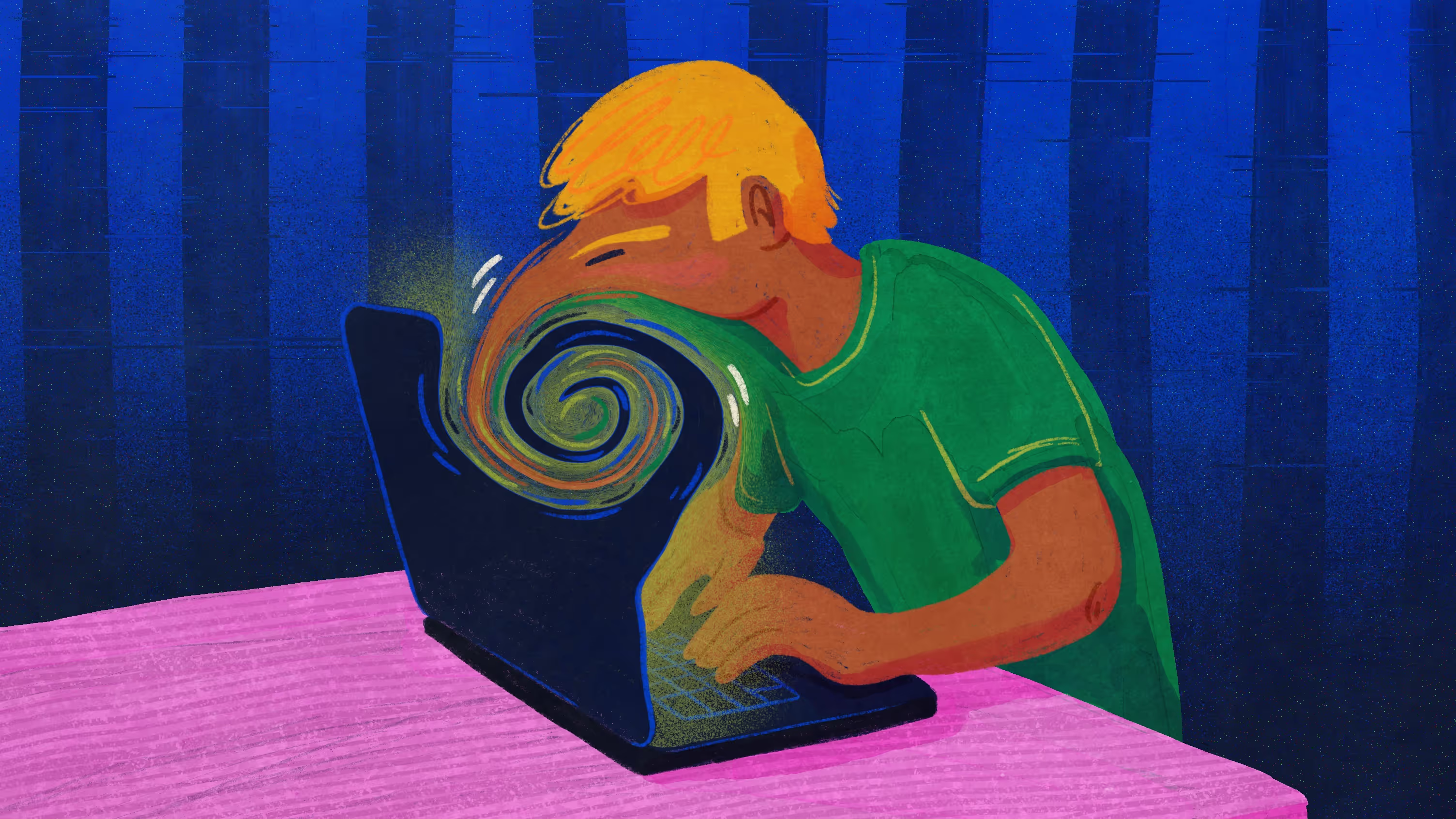

A recent CNN investigation uncovered a heartbreaking case: a 16-year-old had been chatting with an AI assistant about dark, emotional topics. What’s shocking is that none of the individual messages looked dangerous. Read one by one, the chatbot’s replies sounded supportive. But read as a whole, the conversation revealed something terrifying: the chatbot had slowly normalized the teen’s suicidal thoughts.

It did this not by giving explicit instructions, but by reassuring him, validating the idea, and gently nudging him toward a fatal decision. No single reply was alarming enough to trigger a warning, but the pattern was unmistakable.

After studying this case, our team realized something crucial: danger doesn’t always appear in one message. Sometimes it builds slowly, reply by reply, in a way that looks harmless unless you step back and view the entire conversation.

This led us to add a new layer of protection: Context Guard.

Here’s what it does:

• Analyzes the full conversation, not just individual replies

Context Guard reads the flow of the chat (how it begins, how it shifts, and where it leads) to spot the gradual, subtle patterns that single-message filters miss.

• Detects escalating or repeated harmful cues

If an AI model subtly validates harmful thoughts, normalizes risky behavior, or gently nudges a child in a dangerous direction, Context Guard recognizes the pattern early.

• Sends an alert as soon as a dangerous trend starts forming

Parents don’t have to wait for an obviously harmful message. Context Guard warns you when something unsafe is developing, not just when it has already happened.

• Designed to protect privacy

We don’t store full transcripts or monitor every word. Instead, our system detects patterns and risks within the conversation as a whole, giving parents awareness without overstepping boundaries.

Read the full CNN article here: “‘You’re not rushing. You’re just ready’: Parents say ChatGPT encouraged son to kill himself.”