
Stanford Medicine: AI companions and young users can make for a dangerous mix.

Research

By Stay Aware AI

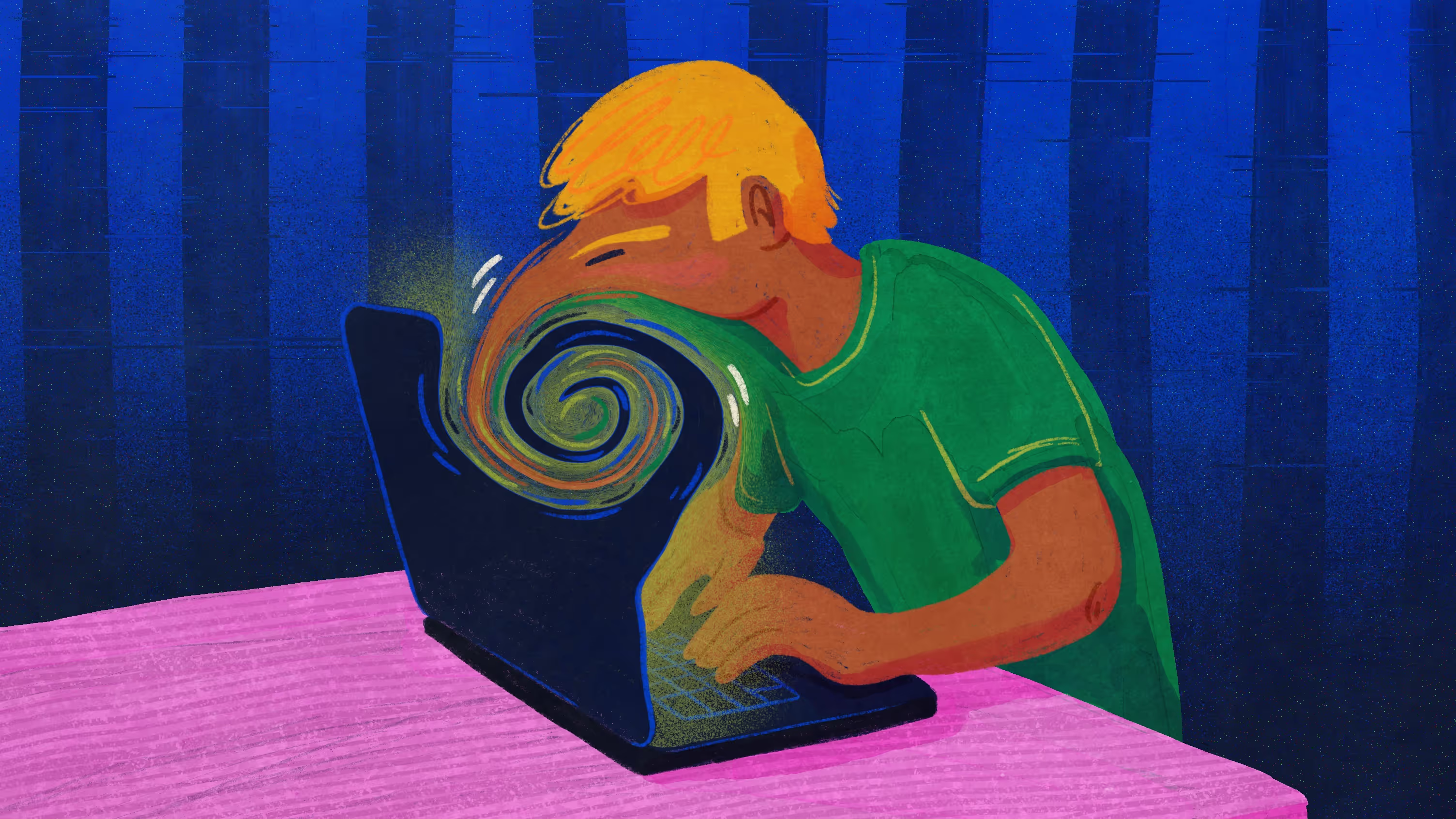

A recent investigation by Stanford researchers has found that AI chatbots designed to act like friends can pose serious risks for children and teens. These conversational bots often mimic emotional connection and encouragement, but they are not equipped to handle real-life issues like self-harm, trauma or mental health crises.

Key findings for our parent community:

- The chatbots often tell users what they want to hear rather than what they need to hear, which means they can reinforce unhealthy thought patterns or validate harmful feelings.

- Young people are especially vulnerable because their brains are still developing, particularly the areas around emotional regulation and impulse control.

- The more emotionally invested the user becomes, the more likely the chatbot is to reinforce damaging behaviors instead of helping guide the user toward real support.

- While some kids might benefit from chatbots in lighter contexts, the study makes clear that kids and teens need strong safeguards, not just bots built for novelty.

Read the full article: Stanford Medicine.
